Step 1: Control the Process

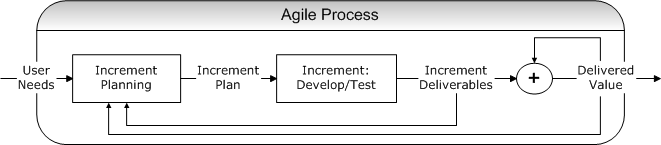

Agile methods focus on incremental delivery and, after each increment, on feedback about how well the product's value matches user needs. Quick action can then be taken on the very next increment to bring the product closer to meeting user needs, even if these have changed since the previous increment. This not only brings the delivered value closer to user needs at each increment, but also reduces risk, because the project succeeds through progressive increments and accumulates value at each one. [2]


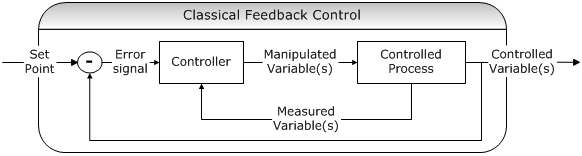

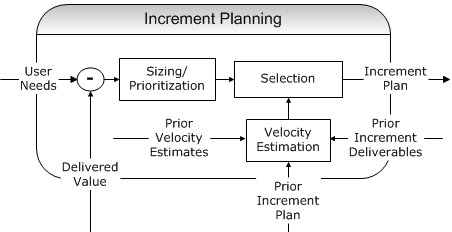

In agile methods, increment planning functions as a feedback controller, which compensates both for changing user needs and for deviations in process performance. A key feature of classical feedback control is that the controller generates its control actions based on an "error signal", that is, the difference between the "set point" (user needs) and the controlled variable (delivered functionality).
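The feedback loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the `capacity` limit, and the `efficiency` factor are hypothetical and do not come from any agile method or tool. Value is measured in arbitrary units such as story points.

```python
def plan_next_increment(user_needs, delivered, capacity):
    """Return how much value to plan for the next increment.

    user_needs: the set point, total value users currently need
    delivered:  the controlled variable, total value delivered so far
    capacity:   hypothetical upper bound on value per increment
    """
    error = user_needs - delivered        # the "error signal"
    return max(0, min(error, capacity))   # control action, bounded by capacity


def simulate(needs_per_increment, capacity, efficiency=1.0):
    """Run the loop once per increment and record what happened.

    needs_per_increment: user needs as observed before each increment,
                         which may change from one increment to the next
    efficiency:          fraction of planned value actually delivered
                         (models deviations in process performance)
    """
    delivered = 0.0
    history = []
    for needs in needs_per_increment:
        planned = plan_next_increment(needs, delivered, capacity)
        delivered += planned * efficiency
        history.append((needs, planned, delivered))
    return history


if __name__ == "__main__":
    # User needs grow from 100 to 120 units after the second increment.
    for needs, planned, delivered in simulate([100, 100, 120, 120, 120], capacity=40):
        print(f"needs={needs} planned={planned} delivered={delivered}")
```

Because each plan is computed from the current error rather than from a fixed up-front schedule, the loop absorbs the change in user needs without any special handling.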


The velocity estimation step (estimating the quantity of user value delivered per iteration) is an indispensable part of increment planning [3]. The estimate may be based on prior velocity estimates from the same project or from previous projects of similar complexity, team size and experience. The team may also compare planned vs. delivered value in previous iterations to judge how much confidence to place in the velocity estimate.
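As a rough sketch of such a calculation, the snippet below averages the value delivered in the most recent iterations and reports the mean ratio of delivered to planned value. The function names, the three-iteration window, and the sample figures are illustrative assumptions, not a prescribed procedure.

```python
from statistics import mean


def estimate_velocity(delivered_per_iteration, window=3):
    """Estimate velocity as the mean value delivered over the last `window` iterations."""
    recent = delivered_per_iteration[-window:]
    return mean(recent)


def planning_accuracy(planned, delivered):
    """Mean ratio of delivered to planned value over past iterations.

    A result close to 1.0 suggests plans have been realistic; a result well
    below 1.0 suggests the team has been over-committing.
    """
    ratios = [d / p for p, d in zip(planned, delivered) if p > 0]
    return mean(ratios)


if __name__ == "__main__":
    planned = [25, 25, 25, 25]      # hypothetical story points planned
    delivered = [20, 24, 22, 26]    # hypothetical story points delivered
    print("velocity estimate:", estimate_velocity(delivered))
    print("planning accuracy:", round(planning_accuracy(planned, delivered), 2))
```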


In order to obtain accurate and consistent velocity estimates and increment plans, the units and methods that are used to estimate user value (the "sizing" step) should be consistent over the course of the project, whether these be "stories", "story points" or "features". It helps to have a brief (no more than one page) written procedure for how to perform this step, based if possible on statistical data from the current and prior projects. This will give consistent process control, even if organizational learning leads to changes in the numbers that are "plugged in" to the procedure.

Step 2: Automate the Process

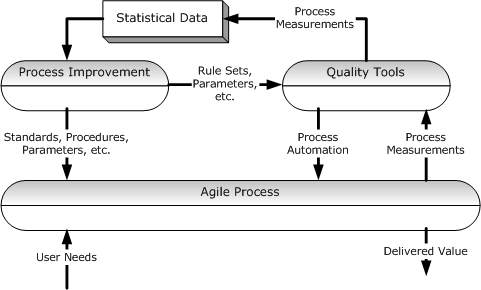

Once the process is under control, as much of the process as possible should be automated in order to ensure consistent process performance and improved product quality. Quality tool suites, such as CodePro AnalytiX™ from Google [4], support process automation by providing:

- Code Audit tools that detect violations of good programming practice and provide "auto-fix" capabilities where possible;

- Duplicate Code Analysis tools that detect duplicate or similar segments of code that may contain copy/paste bugs, or that can be refactored to improve application design and maintainability;

- Javadoc Repair tools to facilitate complete documentation by providing missing Javadoc elements in a comparison editor;

- JUnit Test tools that provide automated generation and specialized editors for JUnit tests. This can be especially useful in generating and extending test frameworks for Test Driven Design (TDD), starting from initial skeleton class and method definitions;

- Metrics tools that measure and report on key quality indicators;

- Code Coverage tools that measure what percentage of code is being executed, down to the byte code level, and present coverage results in the Java source code so that missing test coverage can be found and repaired easily;

- Dependency Analysis tools that assist in reducing complexity by detecting and displaying cyclic dependencies among projects, packages, and types.
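To make the last item concrete, here is a minimal sketch of how cyclic dependencies can be detected with a depth-first search. It is not how CodePro AnalytiX or any other tool is implemented; the `find_cycle` function and the package names are hypothetical.

```python
def find_cycle(dependencies):
    """Return one dependency cycle as a list of names, or None if there is none.

    dependencies: dict mapping a package name to the names it depends on.
    """
    WHITE, GREY, BLACK = 0, 1, 2    # unvisited, on the current path, finished
    state = {}
    path = []

    def visit(node):
        state[node] = GREY
        path.append(node)
        for dep in dependencies.get(node, ()):
            dep_state = state.get(dep, WHITE)
            if dep_state == GREY:
                # dep is already on the current path, so we have closed a loop
                return path[path.index(dep):] + [dep]
            if dep_state == WHITE:
                cycle = visit(dep)
                if cycle:
                    return cycle
        path.pop()
        state[node] = BLACK
        return None

    for node in dependencies:
        if state.get(node, WHITE) == WHITE:
            cycle = visit(node)
            if cycle:
                return cycle
    return None


if __name__ == "__main__":
    packages = {"ui": ["core"], "core": ["util"], "util": ["ui"]}
    print(find_cycle(packages))
```

The recursive search is adequate for an illustration; a very deep dependency graph would call for an iterative version.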

Step 3: Optimize the Process

In preparing for process optimization, there are two key questions you should ask about any automated quality tool:

- Can the tool export the results of analysis and testing in a format (e.g., XML) suitable for importing into a statistical database?

- Is the tool rule-based and configurable, so that its configuration parameters can be adjusted as part of the improvement process?
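As an illustration of the first question, the sketch below loads an XML report into a SQLite table using only the Python standard library. The report layout (`<report build="...">` containing `<metric name="..." value="..."/>` elements), the table schema, and the `load_report` function are invented for this example; a real tool's export format will differ and should be taken from its documentation.

```python
import sqlite3
import xml.etree.ElementTree as ET

# Hypothetical export format, made up for this example.
SAMPLE_REPORT = """
<report build="42">
  <metric name="line_coverage" value="81.5"/>
  <metric name="audit_violations" value="17"/>
</report>
"""


def load_report(xml_text, connection):
    """Insert every metric in the report into the measurements table.

    Returns the number of rows inserted.
    """
    root = ET.fromstring(xml_text.strip())
    build = int(root.get("build"))
    connection.execute(
        "CREATE TABLE IF NOT EXISTS measurements "
        "(build INTEGER, name TEXT, value REAL)"
    )
    rows = [
        (build, metric.get("name"), float(metric.get("value")))
        for metric in root.iter("metric")
    ]
    connection.executemany("INSERT INTO measurements VALUES (?, ?, ?)", rows)
    connection.commit()
    return len(rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")   # use a file path to retain the data
    print(load_report(SAMPLE_REPORT, conn), "measurements stored")
```

Storing one row per build and metric keeps the history needed for later statistical analysis, whatever the export format turns out to be.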


Once process measurements are acquired and retained in a statistical database, you can apply well-known statistical methods to:

- Determine whether your process is under control.

- Determine if it is becoming more controllable and repeatable.

- Determine whether or not changes to process standards, procedures, and quality rules are leading to improved process performance and product quality.

- Determine which changes in process parameters (e.g., constants in velocity prediction formulas, defect alarm thresholds, etc.) are most likely to produce significant process improvements.

- Improve longer-term software qualities such as robustness, reliability and maintainability.
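One well-known way to address the first of these points is an individuals (XmR) control chart, sketched below. The choice of this chart is an assumption made for illustration and is not necessarily the method the cited references recommend; the function names and velocity figures are hypothetical. The constant 2.66 is the standard XmR factor that converts the average moving range into three-sigma limits.

```python
from statistics import mean


def xmr_limits(values):
    """Return (lower limit, center line, upper limit) for an individuals chart."""
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    center = mean(values)
    spread = 2.66 * mean(moving_ranges)
    return center - spread, center, center + spread


def out_of_control(values):
    """Return the indexes of points that fall outside the control limits."""
    lower, _, upper = xmr_limits(values)
    return [i for i, v in enumerate(values) if v < lower or v > upper]


if __name__ == "__main__":
    # Hypothetical velocity per iteration; the last value is unusual.
    velocity = [22, 24, 23, 25, 24, 23, 24, 22, 25, 23, 24, 45]
    print("limits:", xmr_limits(velocity))
    print("out-of-control iterations:", out_of_control(velocity))
```

In practice the limits are usually computed from a baseline period believed to be stable and then applied to new measurements, so that an unusual point does not widen the limits used to judge it.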

You don't have to amass large quantities of statistical data in order to begin process improvement using statistical techniques. Of course, you'll need to learn those techniques; fortunately, the necessary information is readily available [5, 6].

In Conclusion

Be just as agile and incremental in your process improvement efforts as you are in your product design and development:

- Start small - Get your process under control by stabilizing your velocity estimation procedures.

- Pick the low-hanging fruit first - Use automated quality tools to increase process velocity and product quality.

- Use automated quality tools to help with the gathering of statistical data on process performance and product quality, and use the statistical data for ongoing process improvement and optimization.

The bad news is that you will have to write down some procedures and learn some statistics. The good news is that you will be able to replace endless wrangling and political hassles with quantitative evidence that your process is improving.
